A/B testing is one of the most direct ways to optimize websites, apps, and marketing campaigns with data rather than guesswork. This guide walks through the full process, from formulating hypotheses and designing tests to choosing the right metrics and analyzing results, so that both seasoned marketers and newcomers can use it to improve conversion rates and overall business performance.

Along the way, it covers defining clear objectives, selecting A/B testing tools, understanding statistical significance, and avoiding common pitfalls. It also introduces more advanced techniques for segmenting your audience, personalizing experiences, and iteratively refining your testing strategy, with the goal of improving user engagement, conversions, and revenue.

What is A/B Testing and How Does it Work?

A/B testing, also known as split testing, is a method of comparing two versions of a webpage, email, or other marketing asset to determine which performs better. It involves showing two variants (A and B) to similar audiences and tracking key metrics to see which version achieves a higher conversion rate, click-through rate, or other desired outcome.

The process typically works as follows: You create two versions of your content that differ in a single element, the “variable.” This could be a headline, call to action, image, or even the layout. Then, each visitor is randomly assigned to see either version A or version B, so that the two groups are comparable. Data is collected on how each group interacts with its variant. Finally, the results are analyzed to determine which version performed better based on pre-defined metrics. The winning version is then implemented.
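The random assignment step can be sketched in a few lines of Python. This is a minimal illustration, not how any particular testing tool works: the function name `assign_variant`, the experiment label, and the choice of SHA-256 hashing are all assumptions made for the example. Hashing the user ID means a returning visitor always sees the same version without any stored state.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically map a user to variant 'A' or 'B'.

    `split` is the share of traffic sent to A (hypothetical parameter).
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Take the first 8 hex digits as an integer in [0, 2**32) and scale to [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "headline-test")] += 1
    print(counts)  # roughly even split between A and B
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user who lands in A for one test is not automatically in A for the next.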

A/B testing helps you make data-driven decisions, optimizing your marketing efforts and maximizing your return on investment. By systematically testing different elements, you can understand what resonates with your target audience and refine your strategies accordingly.

Key Principles of Effective A/B Testing

Effective A/B testing relies on a few key principles to ensure reliable results and meaningful improvements. Focusing on a clear hypothesis is paramount. Before starting a test, define a specific problem and a proposed solution you believe will improve a metric. This focused approach ensures the test is purposeful and results are actionable.

Sample size is crucial. A small sample size can lead to misleading results due to random variations. An adequate sample size gives the test enough statistical power to detect a real difference and makes it less likely that an observed difference is due to chance. Determine the necessary sample size in advance, based on your baseline conversion rate, the smallest effect you want to detect, and the desired significance level and power.
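As a rough sketch of that calculation, the standard normal-approximation formula for comparing two proportions can be written as follows. The function name, the default 5% significance level, and the 80% power are assumptions chosen for illustration; dedicated calculators may use slightly different formulas and give somewhat different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float,
                            relative_lift: float,
                            alpha: float = 0.05,
                            power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_lift: smallest relative improvement worth detecting, e.g. 0.20
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical scenario: 5% baseline, aiming to detect a 20% relative lift.
    print(sample_size_per_variant(0.05, 0.20))
```

Note how quickly the requirement grows as the detectable effect shrinks: halving the lift roughly quadruples the sample needed.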

Test one variable at a time. Changing multiple elements simultaneously makes it difficult to isolate which change influenced the results. Testing individual variables provides clear insights into the impact of each change, leading to more accurate conclusions and effective optimization.

Finally, be patient and let the test run its course. Ending a test prematurely or extending it beyond the planned duration can skew the results. Predetermined test durations, based on projected traffic and desired statistical power, prevent skewed data and contribute to more reliable conclusions.
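A planned duration can be estimated from the required sample size and projected traffic. The sketch below is illustrative only: the function name and the option to round up to whole weeks (so every weekday is represented equally) are assumptions for the example, not a rule taken from any specific tool.

```python
import math


def planned_duration_days(sample_per_variant: int,
                          daily_visitors: int,
                          variants: int = 2,
                          round_to_weeks: bool = True) -> int:
    """Estimate how many days a test must run to reach its planned sample."""
    total_needed = sample_per_variant * variants
    days = math.ceil(total_needed / daily_visitors)
    if round_to_weeks:
        # Round up to full weeks so each day of the week is covered equally.
        days = math.ceil(days / 7) * 7
    return days


if __name__ == "__main__":
    # Hypothetical numbers: 8,000 visitors per variant, 1,500 visitors per day.
    print(planned_duration_days(8000, 1500))
```

Fixing this number before launch makes it easier to resist stopping the test the moment one variation pulls ahead.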

Essential Tools for A/B Testing

Choosing the right A/B testing tools is crucial for efficient experimentation and accurate data analysis. Several platforms cater to various needs and budgets.

Google Optimize was for years a popular free choice for beginners thanks to its integration with Google Analytics, but Google discontinued it on September 30, 2023. If you see it recommended in older material, look instead for a currently supported tool that integrates with your analytics platform.

Optimizely is a comprehensive platform with advanced targeting and analytics features, ideal for larger organizations with complex testing requirements. Its visual editor and statistical tools make it a powerful solution.

VWO (Visual Website Optimizer) provides a user-friendly interface with advanced features like multivariate testing and behavioral targeting, suitable for businesses looking for in-depth experimentation and personalization.

AB Tasty focuses on user experience optimization and offers features like session recording and heatmaps, providing insights into user behavior alongside A/B testing functionality.

Selecting the right tool depends on your specific goals, technical capabilities, and budget. Consider factors like ease of use, integration with existing analytics platforms, and the level of customization required when making your choice.

Setting Up Your First A/B Test

Setting up your first A/B test involves a systematic approach to ensure accurate and meaningful results. Begin by clearly defining your objective. What are you hoping to achieve with this test? Increased conversion rates? Higher click-through rates? A clearly defined objective will guide your entire testing process.

Next, identify the element you want to test. This could be a headline, a call-to-action button, an image, or any other element on your webpage. Only test one element at a time to isolate its impact effectively. Create a variation (Version B) of this element. Ensure the change is distinct enough to potentially impact user behavior.

Choose your A/B testing tool. Several platforms offer user-friendly interfaces for managing your tests. Once you have selected a tool, integrate it with your website. Define your target audience and split the traffic equally between the original version (Version A, the control) and the variation (Version B). Finally, set a timeframe for the test that allows enough time to reach the sample size you need.

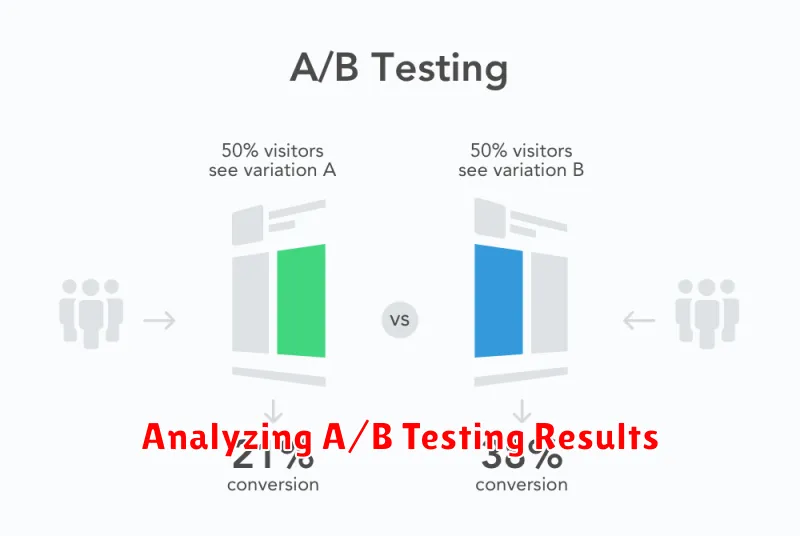

Analyzing A/B Testing Results

Analyzing your A/B testing results is the crucial final step in determining the winning variation. This involves more than just looking at which version had a higher conversion rate. Statistical significance plays a vital role in ensuring the observed differences aren’t due to random chance.

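Use a statistical significance calculator or A/B testing software to determine if your results are statistically significant. A common threshold is a 95% confidence level (a significance level of 0.05): if there were truly no difference between the versions, a gap at least as large as the one you observed would appear less than 5% of the time. Note that this is not the same as a 95% probability that the observed difference is real.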
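For readers who want to see what such a calculator does, here is a minimal sketch of a two-sided, two-proportion z-test using only the Python standard library. It is one common approach, not necessarily the method any given tool uses, and the function name and example counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value) for the difference in conversion rate, B minus A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no conversions (or all conversions): nothing to compare
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: A converts 400 of 8,000; B converts 480 of 8,000.
    z, p = two_proportion_z_test(400, 8000, 480, 8000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("significant at 0.05" if p < 0.05 else "not significant at 0.05")
```

The normal approximation behind this test is reasonable for the large samples typical of web traffic; with very few conversions, an exact test is a safer choice.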

Consider the sample size of your test. A larger sample size generally leads to more reliable results. Also, evaluate the duration of your test. Running the test for an adequate period ensures you capture variations in user behavior across different days and times.

Finally, look beyond just the primary metric. Analyze secondary metrics to understand the broader impact of the changes. For example, if your primary metric is click-through rate, you might also examine bounce rate and time on page to gain a more complete understanding of user behavior.

Common A/B Testing Mistakes to Avoid

A/B testing, while powerful, can be easily misused. Avoiding common pitfalls is crucial for obtaining reliable results and maximizing your return on investment. Here are some frequent mistakes to watch out for:

Testing Too Many Elements Simultaneously

Changing multiple elements at once makes it impossible to isolate the impact of each change. Test one variable at a time to understand its true effect.

Insufficient Sample Size

A small sample size leaves the test underpowered: real differences can go undetected, and apparent winners are more likely to be noise. Ensure you have a large enough sample to confidently determine the winning variation.

Stopping the Test Too Early

Ending a test as soon as one variation pulls ahead can skew the results, because early leads are often just noise. Allow the test to run its full planned duration to account for fluctuations in user behavior.
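The effect of stopping early can be demonstrated with a small simulation. In the sketch below, both variants share the same true conversion rate, so every "significant" result is a false positive. All names and parameter values are assumptions chosen for illustration. It compares checking the results at ten interim points and stopping at the first significant one against checking only once at the end.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_false_positives(runs=400, looks=10, users_per_look=200,
                             rate=0.10, alpha=0.05, seed=7):
    """Return (false positive rate with peeking, rate with one final check)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _run in range(runs):
        conv_a = conv_b = n = 0
        flagged_at_any_look = False
        for _look in range(looks):
            # Both variants convert at the same true rate: there is no real effect.
            conv_a += sum(rng.random() < rate for _u in range(users_per_look))
            conv_b += sum(rng.random() < rate for _u in range(users_per_look))
            n += users_per_look
            if p_value(conv_a, n, conv_b, n) < alpha:
                flagged_at_any_look = True
        peeking_hits += flagged_at_any_look
        final_hits += p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / runs, final_hits / runs


if __name__ == "__main__":
    peeking, final_only = simulate_false_positives()
    print(f"false positives when peeking at every look: {peeking:.1%}")
    print(f"false positives with a single final check:  {final_only:.1%}")
```

The single final check should land near the nominal 5%, while the peeking rate typically comes out well above it, which is why a fixed duration (or a method designed for sequential monitoring) matters.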

Ignoring Statistical Significance

Don’t declare a winner based solely on gut feeling. Rely on statistical significance to ensure the observed changes are not due to random chance.

Not Defining Clear Goals

Start with a clear hypothesis and measurable goals. Without them, you won’t know what you’re trying to achieve or how to measure success.

Best Practices for A/B Testing

Implementing A/B testing effectively requires careful planning and execution. Following best practices ensures reliable results and maximizes your chances of discovering winning variations.

Define Clear Objectives

Clearly define your goals before starting any A/B test. What are you trying to achieve? Increased conversion rates? Higher click-through rates? Having a specific, measurable objective will guide your testing process.

Focus on One Element at a Time

Test only one variable at a time. Changing multiple elements simultaneously makes it impossible to isolate the impact of each individual change. This focused approach ensures accurate attribution of results.

Test with a Sufficient Sample Size

Ensure your test runs long enough to reach the sample size you calculated in advance. This avoids drawing misleading conclusions from small fluctuations in data. A larger sample size provides greater confidence in the observed results.

Analyze and Iterate

Thoroughly analyze the results of your A/B tests. Identify the winning variation and document the reasons for its success. Use these insights to inform future tests and continually optimize your strategies.

Advanced A/B Testing Techniques

Once you’ve mastered the basics of A/B testing, exploring advanced techniques can unlock further optimization potential. Multivariate testing (MVT) allows you to test multiple variations of several elements simultaneously, identifying the best combination for optimal performance. This is more complex than standard A/B testing and requires considerably more traffic, because visitors are spread across many combinations, but it provides richer insights.

Bandit testing, another advanced method, dynamically allocates traffic to winning variations as results emerge. This minimizes exposure to underperforming versions and accelerates optimization. Consider this approach for rapid testing and iterative improvements.
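One widely used bandit strategy is Thompson sampling, sketched below as a simulation. The text above does not prescribe a specific algorithm, so this choice, the function name, and the simulated conversion rates are all assumptions for illustration. Each variation keeps a Beta distribution over its conversion rate, and each visitor is routed to whichever variation draws the highest sample.

```python
import random


def thompson_sampling(true_rates, rounds=5000, seed=42):
    """Simulate traffic allocation; returns how many visitors each arm received.

    true_rates: hypothetical true conversion rates, unknown to the algorithm.
    """
    rng = random.Random(seed)
    arms = len(true_rates)
    successes = [0] * arms
    failures = [0] * arms
    pulls = [0] * arms
    for _ in range(rounds):
        # Draw a plausible conversion rate for each arm from its Beta posterior
        # (uniform Beta(1, 1) prior) and show the visitor the best-looking arm.
        samples = [rng.betavariate(successes[i] + 1, failures[i] + 1)
                   for i in range(arms)]
        arm = samples.index(max(samples))
        pulls[arm] += 1
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls


if __name__ == "__main__":
    pulls = thompson_sampling([0.05, 0.15])
    print(pulls)  # most traffic should flow to the second, stronger variation
```

The trade-off is that unequal allocation makes a classical significance test harder to apply, so bandits fit best when the goal is maximizing conversions during the test rather than a precise estimate of the difference.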

Factorial testing helps understand the interactions between different elements. By testing combinations of variations, you can uncover synergistic effects that simple A/B testing might miss. This technique provides a more comprehensive view of how elements interact.
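To make the idea concrete, the sketch below enumerates every combination in a full factorial design and computes a simple interaction effect for the two-factor, two-level case. The factor names, levels, and conversion rates are hypothetical, chosen only to illustrate the calculation.

```python
from itertools import product


def factorial_design(factors):
    """List every combination of levels, one dict per test cell."""
    names = list(factors)
    level_lists = (factors[name] for name in names)
    return [dict(zip(names, combo)) for combo in product(*level_lists)]


def interaction_effect(rate):
    """2x2 designs only: how much factor 2's effect changes with factor 1.

    rate maps (factor1_level, factor2_level), each 0 or 1, to a conversion rate.
    """
    effect_at_low = rate[(0, 1)] - rate[(0, 0)]
    effect_at_high = rate[(1, 1)] - rate[(1, 0)]
    return effect_at_high - effect_at_low


if __name__ == "__main__":
    cells = factorial_design({
        "headline": ["original", "benefit-led"],
        "button": ["green", "orange"],
    })
    for cell in cells:
        print(cell)

    # Hypothetical observed rates: (new headline?, new button?) -> conversion rate
    rates = {(0, 0): 0.05, (0, 1): 0.06, (1, 0): 0.06, (1, 1): 0.09}
    print(f"interaction: {interaction_effect(rates):+.3f}")
```

A positive interaction here would mean the two changes together lift conversions by more than the sum of their individual effects, which is exactly the kind of synergy a sequence of single-variable tests cannot reveal.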

Finally, applying personalization based on user segments allows you to tailor experiences and further refine your optimization strategy. By analyzing user behavior and demographics, you can create targeted variations for specific groups.
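A first step toward segment-level personalization is simply breaking test results down by segment. The sketch below assumes a hypothetical event format of `(segment, variant, converted)` tuples; real analytics exports will look different.

```python
from collections import defaultdict


def conversion_by_segment(events):
    """Return {(segment, variant): conversion_rate} from raw visit records."""
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for segment, variant, converted in events:
        cell = totals[(segment, variant)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


if __name__ == "__main__":
    # Tiny made-up sample purely to show the data shape.
    events = [
        ("mobile", "A", True), ("mobile", "A", False),
        ("mobile", "B", True), ("mobile", "B", True),
        ("desktop", "A", False), ("desktop", "B", False),
    ]
    for key, rate in sorted(conversion_by_segment(events).items()):
        print(key, f"{rate:.0%}")
```

Each segment contains only a fraction of the total traffic, so differences between segments need the same sample-size and significance checks as the overall test before they are acted on.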

Staying Ahead in the World of A/B Testing

The landscape of A/B testing is constantly evolving. To maintain a competitive edge, staying informed about the latest trends and advancements is crucial.

Continuous learning is key. Seek out reputable resources like industry blogs, forums, and conferences to stay abreast of new methodologies and best practices. Explore emerging technologies and tools that can enhance your A/B testing capabilities.

Experimentation is also vital. Don’t be afraid to test new ideas and approaches. The world of A/B testing rewards those who are willing to push boundaries and challenge conventional wisdom.

Embrace a culture of data-driven decision-making throughout your organization. Foster collaboration between teams and ensure that insights gleaned from A/B testing are shared and implemented effectively.