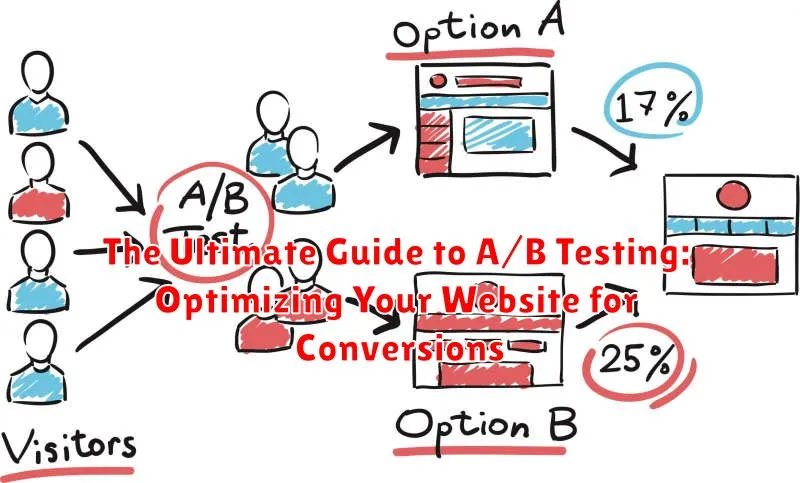

Are you ready to unlock the full potential of your website and skyrocket your conversions? A/B testing, also known as split testing, lets you make data-driven decisions about your website by showing different versions of a page to different visitors and measuring which one performs better. This ultimate guide to A/B testing will equip you with the knowledge and strategies you need to run effective experiments and achieve measurable improvements in key metrics such as conversion rates, click-through rates, and overall user engagement. Whether you’re a seasoned marketer or just starting out, you’ll find actionable insights and practical tips to transform your website into a high-converting machine.

In the fiercely competitive digital landscape, simply having a website is not enough. To truly thrive, you must continuously refine your website so that it effectively converts visitors into customers. A/B testing offers a systematic approach to this optimization: you test different variations of your web pages and determine which version performs better. This guide walks through the whole process, from formulating a robust hypothesis to analyzing results and implementing winning variations. Learn how to identify key areas for improvement, create compelling variations, and measure whether the impact of your changes is statistically significant. By mastering A/B testing, you can gain a competitive edge and achieve sustainable growth online.

Defining Your A/B Testing Goals

Before diving into an A/B test, clearly define your objectives. What are you hoping to achieve? A well-defined goal is crucial for measuring success and ensuring your test yields actionable insights. Start by identifying a key performance indicator (KPI) you want to improve.

Common KPIs include:

- Conversion Rate: The percentage of visitors who complete a desired action (e.g., purchase, sign-up).

- Click-Through Rate (CTR): The percentage of visitors who click on a specific element (e.g., button, link).

- Bounce Rate: The percentage of visitors who leave after viewing a single page without interacting further.

- Average Order Value (AOV): The average amount spent per order (total revenue divided by the number of orders).
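To make the definitions above concrete, here is a minimal Python sketch that computes each KPI from raw counts. The `PageStats` fields and the sample numbers are hypothetical, and your analytics tool may define these metrics slightly differently (for example, CTR is often measured per impression rather than per visitor).

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Hypothetical aggregate counts for one page version over the test period."""
    visitors: int      # unique visitors who saw the page (assumed > 0)
    conversions: int   # visitors who completed the desired action
    clicks: int        # visitors who clicked the tracked element (e.g. a CTA)
    bounces: int       # visitors who left after this page without interacting
    orders: int        # number of orders placed
    revenue: float     # total revenue from those orders


def kpis(s: PageStats) -> dict[str, float]:
    """Return each KPI as a fraction (multiply by 100 for a percentage)."""
    return {
        "conversion_rate": s.conversions / s.visitors,
        "ctr": s.clicks / s.visitors,
        "bounce_rate": s.bounces / s.visitors,
        # AOV is total revenue divided by number of orders; guard against zero orders.
        "aov": s.revenue / s.orders if s.orders else 0.0,
    }


stats = PageStats(visitors=2_000, conversions=60, clicks=240,
                  bounces=900, orders=60, revenue=4_500.0)
print(kpis(stats))
```

With these illustrative counts the page converts 3% of visitors, 12% click the tracked element, 45% bounce, and the average order is worth 75.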

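Once you’ve selected a KPI, set a specific, measurable, achievable, relevant, and time-bound (SMART) goal. For example, instead of aiming to “increase conversions,” aim to “increase the conversion rate of the landing page by 10% within the next month.” State whether the 10% is relative (for example, from 3.0% to 3.3%) or absolute (from 3.0% to 13.0%, i.e., 10 percentage points), because the two targets differ enormously.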
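A “10%” goal can be read as a relative lift or as an absolute change in percentage points. The short Python sketch below shows the difference; the 3.0% baseline is a hypothetical figure, and the function names are illustrative rather than part of any tool.

```python
def target_rate(baseline: float, relative_lift: float) -> float:
    """Conversion rate you must reach for a relative lift (0.10 means +10% of baseline)."""
    return baseline * (1 + relative_lift)


def describe_lift(baseline: float, observed: float) -> tuple[float, float]:
    """Return (absolute change in percentage points, relative change as a fraction)."""
    absolute_pp = (observed - baseline) * 100
    relative = (observed - baseline) / baseline
    return absolute_pp, relative


baseline = 0.030  # hypothetical current landing-page conversion rate (3.0%)
print(f"+10% relative target: {target_rate(baseline, 0.10):.2%}")  # 3.30%
print(f"+10 points absolute target: {baseline + 0.10:.2%}")        # 13.00%
```

For instance, moving from 3.0% to 3.3% is a gain of only 0.3 percentage points, yet it is a 10% relative lift; `describe_lift(0.03, 0.033)` returns approximately `(0.3, 0.1)`.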

Documenting your goals ensures everyone involved understands the purpose of the test and the expected outcome. This clarity helps focus your efforts and facilitates accurate analysis of the results.

Choosing the Right A/B Testing Tools for Your Needs

Selecting the appropriate A/B testing tool is crucial for successful experimentation. The right tool will streamline your workflow and provide valuable insights. Consider these factors when evaluating different options:

Ease of Use: A user-friendly interface is essential, especially for beginners. Look for intuitive navigation and clear reporting dashboards.

Integration: Seamless integration with your existing analytics and marketing platforms simplifies data collection and analysis.

Features: Different tools offer varying levels of functionality. Consider features like visual editors, segmentation capabilities, and advanced statistical analysis.

Pricing: A/B testing tools range from free options to enterprise-level platforms with substantial costs. Choose a solution that aligns with your budget and testing needs.

Scalability: As your website grows, your testing needs may evolve. Select a tool that can scale to accommodate increased traffic and more complex experiments.

Setting Up Your A/B Testing Experiment

Setting up your A/B test correctly is crucial for obtaining reliable results. This involves several key steps to ensure a valid and impactful experiment.

First, define your target audience. Clearly identify the specific segment of users who will be part of your experiment. This ensures the changes you test are relevant to the users you aim to influence.

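Next, determine your sample size. A sufficiently large sample is essential for reliable, statistically significant results. Use a sample size calculator to find how many visitors each version needs, based on your baseline conversion rate, the smallest improvement you want to be able to detect (the minimum detectable effect), your chosen confidence level, and the statistical power you want (commonly 80%).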
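Sample size calculators for conversion-rate tests typically rely on a normal-approximation formula for comparing two proportions. The Python sketch below implements that standard formula under a few assumptions: a two-sided test, equal traffic to both groups, and a minimum detectable effect expressed as a relative lift. Commercial calculators may use slightly different formulas and return somewhat different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline: float, mde_relative: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH group for a two-sided two-proportion test.

    baseline      -- current conversion rate of the control (e.g. 0.03)
    mde_relative  -- smallest relative lift worth detecting (e.g. 0.10 for +10%)
    alpha         -- significance level (0.05 corresponds to 95% confidence)
    power         -- probability of detecting the lift if it really exists
    """
    p1 = baseline
    p2 = baseline * (1 + mde_relative)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


n = sample_size_per_group(baseline=0.03, mde_relative=0.10)
print(n)  # roughly 53,000 visitors per group
```

Small baselines and small lifts quickly drive the required sample into the tens of thousands per group. This is why the expected effect size matters so much when you plan a test.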

Randomization is paramount. Ensure your A/B testing software randomly assigns users to either the control group (which sees the original version) or the variant group (which sees the modified version), and that returning users keep seeing the same version. This guards against selection bias and ensures a fair comparison.
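Many testing tools randomize by hashing a stable user identifier. This makes the assignment effectively random across users but consistent for any one user. The Python sketch below illustrates the idea; the function name, the experiment-name salt, and the choice of SHA-256 are illustrative assumptions and do not describe how any particular product works.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("control", "variant")) -> str:
    """Deterministically map a user to one of the variants with equal probability.

    Hashing "experiment:user_id" means a returning user always lands in the
    same group, while different experiments shuffle users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


counts = {"control": 0, "variant": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-color-test")] += 1
print(counts)  # close to 5,000 each
```

Because assignment depends only on the user ID and experiment name, no lookup table is needed to remember who saw what.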

Finally, set a clear timeframe for your experiment before it starts. Base it on the sample size you need and your daily traffic, and cover at least one full week so that both weekday and weekend behavior are represented. This gives you enough data to reach a statistically sound conclusion without dragging the test out unnecessarily.

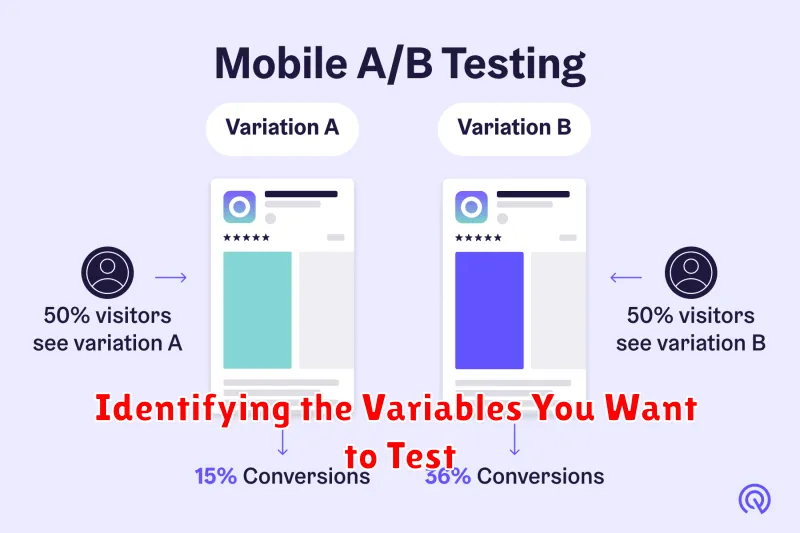

Identifying the Variables You Want to Test

A crucial step in A/B testing is pinpointing the specific variables on your website that impact conversions. Choosing the right elements to test is essential for meaningful results. Don’t try to test everything at once. Focus on key areas likely to influence user behavior.

Consider elements related to your webpage’s Call to Action (CTA). This could include the CTA button’s color, size, text, or placement. Changes to these variables can significantly affect click-through rates.

Headline modifications also offer substantial testing potential. Experiment with different phrasing, length, and font sizes to see what resonates best with your audience. Testing images and other visual content can also reveal valuable insights into user preferences.

Form length and fields are other important variables. Simplifying forms by reducing the number of required fields can often improve conversion rates. Finally, experimenting with different layout and navigation structures can impact user experience and, subsequently, conversions.

Creating Your Variations

Once you’ve identified the variable you want to test, the next step is creating your variations. This involves designing alternative versions of your chosen element, each embodying a distinct approach to achieving your desired outcome. Make sure your variations are distinct enough to produce measurable differences in performance: subtle changes tend to produce small effects, which need much larger samples to detect with statistical significance.

Consider the following when crafting your variations:

- Best Practices: Research industry best practices and competitor strategies for inspiration.

- User Feedback: Incorporate user feedback and pain points into your variation design.

- Creativity: Don’t be afraid to experiment with different approaches and think outside the box.

For example, if you are testing the call-to-action button, you might create variations with different wording (e.g., “Get Started” vs. “Learn More”), colors, or sizes. If you’re testing the headline, you might experiment with different value propositions or emotional appeals.

Remember, each variation should have a clear hypothesis associated with it. This will help you analyze the results and draw meaningful conclusions about the impact of your changes.

Running Your A/B Test and Collecting Data

Once you’ve established your hypothesis and designed your variations, the next crucial step is running your A/B test and collecting data. This phase requires careful planning and execution to ensure the integrity of your results. A key consideration is selecting the right A/B testing tool. There are numerous platforms available, each with varying features and capabilities.

After choosing a tool, implement your variations and define your target audience. Ensure traffic is split evenly between the control and variation(s). Let the test run until it reaches the sample size you calculated in advance; this minimizes the risk of making decisions based on random fluctuations. During the testing period, monitor key metrics like conversion rates, bounce rates, and time spent on page, but avoid stopping the test the first time a difference looks significant. Repeatedly checking and stopping early inflates the chance of a false positive.

Accurate data collection is paramount to making informed decisions. Your A/B testing tool will track the performance of each variation against your defined goals. Ensure the data being collected is relevant and reliable. Any discrepancies or errors in data collection can significantly impact the outcome of your test.
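One common data-quality check, not mentioned above but widely used, is testing for a sample ratio mismatch (SRM). If you planned a 50/50 split but the observed visitor counts drift too far apart, assignment or tracking is probably broken. The Python sketch below applies a chi-squared goodness-of-fit test for two groups. The 0.001 alert threshold is a common convention rather than a fixed rule, and the visitor counts are hypothetical.

```python
import math


def srm_p_value(control_n: int, variant_n: int, expected_share: float = 0.5) -> float:
    """Chi-squared goodness-of-fit p-value for a two-group traffic split.

    expected_share is the planned fraction of traffic going to the control.
    A very small p-value means the observed split is unlikely under the
    planned allocation, which usually points to an assignment or tracking bug.
    """
    total = control_n + variant_n
    expected = (total * expected_share, total * (1 - expected_share))
    observed = (control_n, variant_n)
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(srm_p_value(50_000, 50_400))  # about 0.21: consistent with a 50/50 split
print(srm_p_value(50_000, 51_500))  # far below 0.001: investigate before analyzing
```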

Analyzing Your Results and Making Data-Driven Decisions

Once your A/B test has run its course and gathered sufficient data, the next crucial step is to analyze the results. This involves determining whether the observed differences between your variations are statistically significant.

Statistical significance tells you whether the observed difference is unlikely to be explained by random chance alone, which supports attributing it to the changes you implemented. Various statistical tests can help determine significance, and the right one depends on the type of data you are analyzing. A chi-squared test (or the equivalent two-proportion z-test) suits binary outcomes such as conversion rates, while a t-test suits continuous data such as average order value.
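For conversion rates, the check can be as small as the Python sketch below, which implements a two-proportion z-test using only the standard library. For a 2x2 table this gives the same p-value as a chi-squared test without continuity correction. The visitor and conversion counts are hypothetical, and a production analysis would more likely rely on your testing tool or an established statistics library.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    For a 2x2 table this yields the same p-value as a chi-squared test
    without continuity correction (the chi-squared statistic equals z**2).
    Returns (z statistic, p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pool the rates under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: control 1,500/50,000 (3.0%), variant 1,650/50,000 (3.3%).
z, p = two_proportion_z_test(1_500, 50_000, 1_650, 50_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here p comes out well below 0.05, so at a 95% confidence level the variant’s higher conversion rate would count as statistically significant.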

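Choose a confidence level before the test starts; 95% is typical. A 95% confidence level corresponds to a 5% significance level: if there were truly no difference between the variations, a result at least as extreme as yours would occur less than 5% of the time (p < 0.05). It is not the probability that your results are accurate. A higher confidence level reduces the risk of acting on a false positive, at the cost of needing a larger sample. Alongside significance, consider the magnitude of the change. Even if statistically significant, a small improvement might not be worth implementing if it requires substantial resources.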
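A confidence interval for the difference in conversion rates addresses both questions at once: whether the lift is likely real, and how large it plausibly is. The Python sketch below computes a simple (Wald) interval and compares it with a hypothetical minimum lift worth shipping. The counts, the 0.2-percentage-point bar, and the decision messages are illustrative assumptions.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for the absolute difference p_b - p_a."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical results: control 1,500/50,000 (3.0%), variant 1,650/50,000 (3.3%).
low, high = diff_confidence_interval(1_500, 50_000, 1_650, 50_000)
min_worthwhile = 0.002  # hypothetical: smallest lift (0.2 points) that justifies the work
print(f"Lift: {low:+.2%} to {high:+.2%}")
if low > min_worthwhile:
    print("Even the pessimistic end clears the bar: implement it.")
elif high < min_worthwhile:
    print("Even the optimistic end falls short: probably not worth it.")
else:
    print("Significant or not, the practical value is uncertain: weigh the cost.")
```

With these numbers the interval excludes zero, so the result is statistically significant. However, its lower end sits below the 0.2-point bar, so the third branch runs. This is exactly the situation where magnitude and cost should drive the decision.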

Use data visualization tools to present your findings clearly. Graphs and charts can illustrate the performance of each variation and make it easier to identify trends and patterns. This clear presentation is crucial for communicating results to stakeholders and justifying decisions.

Implementing the Winning Variation

Once your A/B test has reached its planned sample size and you’ve identified a statistically significant winning variation, it’s time to implement it on your website. This stage requires careful planning and execution to ensure a smooth transition and to avoid breaking existing functionality on your site.

The implementation process will vary depending on the nature of your test and the platform you’re using. For example, changes to website copy can often be implemented directly within your content management system (CMS). More complex changes, like alterations to site structure or functionality, might require developer involvement.

Quality assurance testing is crucial after implementing the winning variation. Thoroughly test the implemented changes on different devices and browsers to ensure they function correctly and display as intended. This helps avoid introducing unexpected bugs or user experience issues.

After implementation, continue monitoring key metrics. While the A/B test indicated a positive outcome, real-world performance might vary. Track conversion rates, bounce rates, and other relevant metrics to ensure the winning variation continues performing as expected. Be prepared to make minor adjustments if necessary.

Continuously Testing and Optimizing Your Website

A/B testing isn’t a one-time activity. The digital landscape is constantly evolving, and user behavior changes over time. To maintain optimal website performance, embrace an ongoing cycle of testing and optimization.

After implementing the winning variation from one test, begin planning your next. Continuously monitoring key metrics like conversion rates, bounce rates, and time on page will reveal new areas for potential improvement. This iterative process allows you to make incremental gains that compound over time, resulting in significant improvements to your website’s overall effectiveness.
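The arithmetic behind compounding gains is multiplicative: each lift applies to the already-improved rate. The Python sketch below illustrates this under an optimistic assumption that each winning lift persists and does not interact with the others, which real tests do not guarantee. The five 3% lifts are hypothetical.

```python
import math


def compound_lift(relative_lifts: list[float]) -> float:
    """Total relative lift when each lift multiplies the previous rate."""
    return math.prod(1 + r for r in relative_lifts) - 1


gains = [0.03] * 5  # hypothetical: five winning tests, each +3% relative
total = compound_lift(gains)
print(f"Compounded: {total:.2%}, versus {sum(gains):.0%} if simply added")
# Compounded: 15.93%, versus 15% if simply added
```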

Don’t be afraid to retest previous variations. A variation that lost in a past test might perform better in the current environment due to changes in user behavior or market trends. Regularly revisit past tests and explore new hypotheses.

Develop a structured testing roadmap, prioritizing tests based on potential impact and feasibility. This roadmap should be a dynamic document, adapting to your evolving business goals and website performance. By incorporating continuous A/B testing into your website optimization strategy, you’ll stay ahead of the curve and maximize your website’s conversion potential.
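A roadmap prioritized by impact and feasibility can start as a simple scored backlog. The Python sketch below multiplies two 1-to-10 team estimates and sorts the results. The scoring scale, the product formula, and the backlog items are all hypothetical. Established frameworks such as ICE add a confidence factor, so adapt the formula to your own process.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int       # 1-10: expected effect on the target KPI (team estimate)
    feasibility: int  # 1-10: how cheap and quick the test is to build and run

    @property
    def priority(self) -> int:
        return self.impact * self.feasibility


backlog = [
    TestIdea("Shorter sign-up form", impact=8, feasibility=7),
    TestIdea("New hero headline", impact=6, feasibility=9),
    TestIdea("Redesigned navigation", impact=9, feasibility=3),
]
for idea in sorted(backlog, key=lambda i: i.priority, reverse=True):
    print(f"{idea.priority:3d}  {idea.name}")
```

Re-scoring the backlog after each test keeps the roadmap dynamic. Results from one experiment often change the estimated impact of the next.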